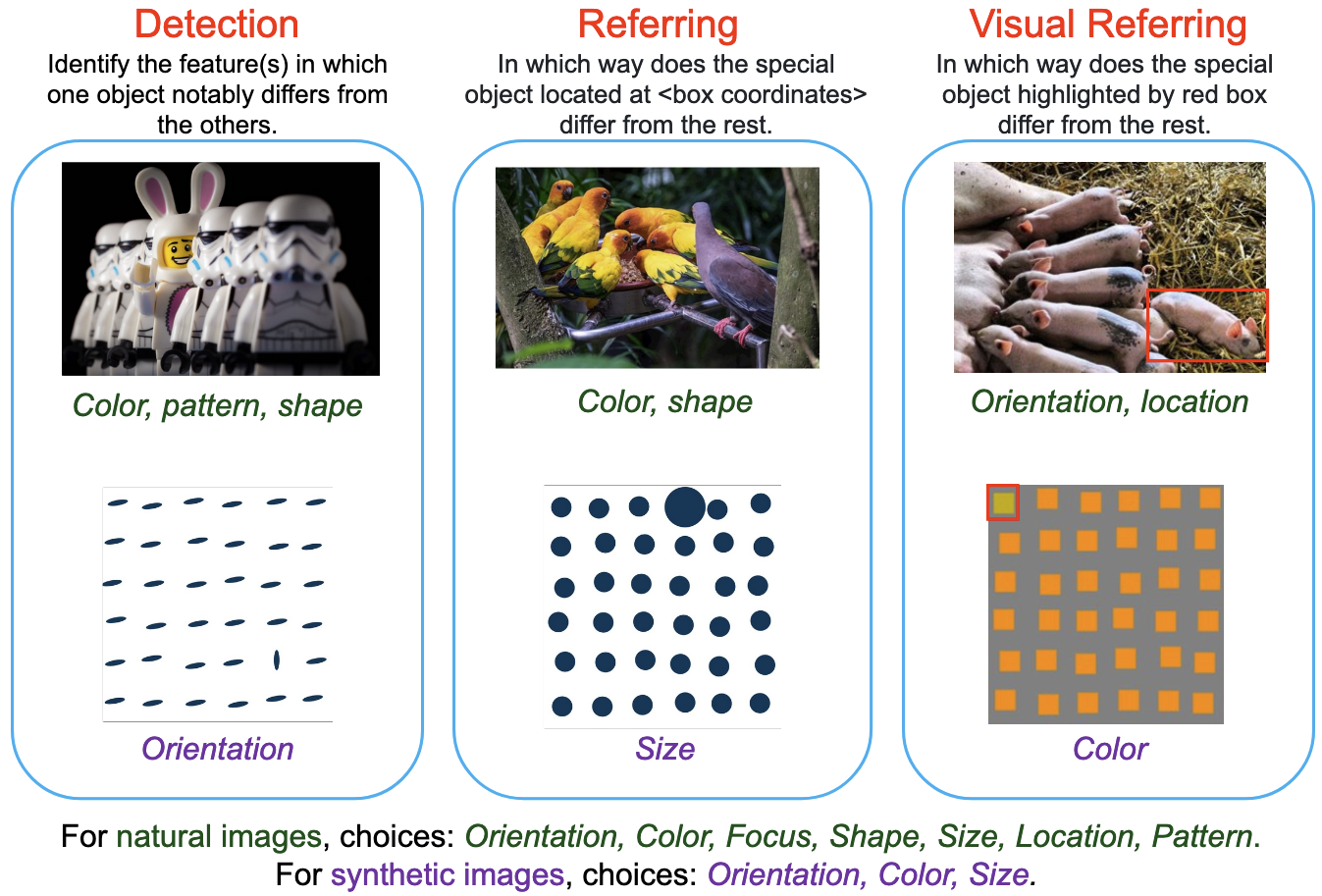

To better evaluate the perceptual capabilities of Large Vision-Language Models (LVLMs), we introduce SalBench, a benchmark designed to test models on visually salient features that humans recognize easily. Unlike existing benchmarks that emphasize high-level reasoning, SalBench targets low-level perceptual tasks, such as spotting differences in color, orientation, or size; these features are fundamental to human visual attention. By incorporating both synthetic and natural image datasets and defining three core tasks (Odd-One-Out Detection, Referring Odd-One-Out, and Visual Referring Odd-One-Out), SalBench exposes significant gaps between human and model performance on tasks that appear deceptively simple.

SalBench is built by augmenting the publicly available P3 and O3 datasets with language instructions, so that vision-language models can be evaluated on visual saliency tasks. The P3 dataset contains 2,514 synthetic images, each with a single target that differs from the surrounding distractors in color, orientation, or size. The O3 dataset consists of 2,001 natural images, each with one salient object that stands out in one or more feature dimensions, such as color, shape, or size.
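The results tables report an overall matching score and F1 scores for each task, but this page does not spell out how they are computed. The Python below is therefore only a minimal sketch under explicit assumptions: every answer is reduced to a set of feature names (for example `{"color"}` or `{"color", "size"}`), "matching" is read as an exact set match, each per-feature F1 is a one-vs-rest binary F1, and the overall F1 is the unweighted mean of the per-feature scores. The last assumption is at least consistent with the published numbers: for Claude-Sonnet on Detection over the synthetic images, (83.4 + 94.6 + 82.0) / 3 ≈ 86.7, which is the reported overall F1. The functions `exact_match`, `per_feature_f1` and `overall_f1` are hypothetical names, not part of SalBench.

```python
from typing import Dict, List, Set

# Hypothetical scoring sketch. Assumptions (not taken from SalBench itself):
# answers are sets of feature names, "matching" = exact set match,
# per-feature F1 = one-vs-rest binary F1, overall F1 = unweighted mean.


def exact_match(gold: List[Set[str]], pred: List[Set[str]]) -> float:
    """Percentage of samples whose predicted feature set equals the gold set."""
    hits = sum(g == p for g, p in zip(gold, pred))
    return 100.0 * hits / len(gold)


def per_feature_f1(gold: List[Set[str]], pred: List[Set[str]],
                   features: List[str]) -> Dict[str, float]:
    """One-vs-rest F1 (in percent) for each feature name."""
    scores = {}
    for f in features:
        tp = sum(f in g and f in p for g, p in zip(gold, pred))
        fp = sum(f not in g and f in p for g, p in zip(gold, pred))
        fn = sum(f in g and f not in p for g, p in zip(gold, pred))
        denom = 2 * tp + fp + fn
        scores[f] = 100.0 * 2 * tp / denom if denom else 0.0
    return scores


def overall_f1(scores: Dict[str, float]) -> float:
    """Unweighted (macro) mean of the per-feature F1 scores."""
    return sum(scores.values()) / len(scores)


# Toy example: the second sample is a "size" target mislabeled as "color".
gold = [{"color"}, {"size"}, {"orientation"}, {"color"}]
pred = [{"color"}, {"color"}, {"orientation"}, {"color"}]
scores = per_feature_f1(gold, pred, ["orientation", "color", "size"])
print(exact_match(gold, pred), scores, overall_f1(scores))
```

On the toy data this gives 75.0 matching, per-feature F1 scores of 100, 80 and 0, and an overall F1 of 60.0. The single mislabeled sample counts as a false positive for color and a false negative for size, so one mistake lowers two per-feature scores at once.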

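SalBench includes three task types to probe model sensitivity to saliency. The abbreviations in parentheses label the task columns of the results tables:

- Odd-One-Out Detection (D)
- Referring Odd-One-Out (R)
- Visual Referring Odd-One-Out (VR)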

Results on P3 (synthetic images). Shot is the number of in-context examples. The Matching columns report overall matching, and all other columns report F1 scores, overall and per feature, for each task (D, R, VR).

| Model | Shot | Matching D | Matching R | Matching VR | Overall D | Overall R | Overall VR | Orientation D | Orientation R | Orientation VR | Color D | Color R | Color VR | Size D | Size R | Size VR |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Claude-Sonnet | 0 | 86.4 | 89.0 | 87.8 | 86.7 | 90.3 | 87.7 | 83.4 | 87.6 | 85.3 | 94.6 | 95.4 | 95.5 | 82.0 | 87.9 | 82.2 |
| NVLM-D-72B | 0 | 83.4 | 57.9 | 59.8 | 83.2 | 73.7 | 51.7 | 77.4 | 75.1 | 61.8 | 98.0 | 80.2 | 80.4 | 74.1 | 65.7 | 12.7 |
| Molmo-7B | 0 | 71.3 | 45.4 | 30.1 | 67.2 | 38.0 | 28.4 | 40.8 | 62.3 | 34.5 | 95.3 | 23.3 | 15.7 | 69.3 | 28.5 | 22.3 |
| Molmo-72B | 0 | 84.1 | 67.0 | 75.5 | 83.4 | 65.6 | 73.6 | 80.7 | 73.4 | 77.5 | 96.5 | 69.4 | 84.5 | 72.9 | 54.0 | 58.5 |
| Llama3.2-Vision-11B | 0 | 51.4 | 17.6 | 55.5 | 48.7 | 52.4 | 52.4 | 52.6 | 57.9 | 59.7 | 62.7 | 58.6 | 69.7 | 30.9 | 40.7 | 27.8 |
| PaliGemma-3B-448 | 0 | 39.7 | 7.1 | 2.4 | 41.4 | 9.5 | 4.8 | 0.9 | 4.9 | 0.0 | 67.0 | 21.5 | 2.8 | 55.1 | 2.0 | 11.7 |
| Phi3-4B | 0 | 51.3 | 59.0 | 52.1 | 41.2 | 55.3 | 47.2 | 12.4 | 66.3 | 45.9 | 45.3 | 50.5 | 62.8 | 65.9 | 49.1 | 32.9 |
|  | 3 | 43.4 | 39.0 | 47.1 | 33.5 | 27.1 | 38.6 | 24.0 | 17.3 | 5.8 | 26.5 | 54.9 | 55.0 | 50.0 | 9.1 | 55.0 |
|  | 5 | 34.2 | 35.1 | 50.8 | 17.0 | 18.9 | 46.7 | 0.0 | 4.7 | 34.5 | 51.0 | 51.6 | 66.6 | 0.0 | 0.4 | 39.1 |
| Phi3.5-Vision-3.5B | 0 | 44.0 | 59.9 | 64.9 | 35.0 | 53.7 | 63.6 | 2.1 | 53.7 | 53.7 | 49.2 | 50.9 | 71.3 | 53.7 | 56.6 | 65.9 |
|  | 3 | 26.7 | 49.8 | 34.7 | 19.5 | 41.0 | 20.8 | 0.0 | 0.5 | 3.0 | 18.2 | 66.7 | 9.9 | 40.3 | 55.8 | 49.5 |
|  | 5 | 35.2 | 24.1 | 33.8 | 29.3 | 11.1 | 19.0 | 1.5 | 0.2 | 0.0 | 38.9 | 26.0 | 7.6 | 47.5 | 7.1 | 49.4 |
| LLaVA-1.6-7B | 0 | 31.2 | 18.2 | 17.7 | 16.3 | 10.1 | 16.6 | 0.0 | 0.0 | 0.0 | 0.1 | 12.3 | 49.9 | 48.9 | 18.1 | 0.0 |
|  | 3 | 32.4 | 17.7 | 34.2 | 16.4 | 8.8 | 17.0 | 0.0 | 1.4 | 0.0 | 0.0 | 10.1 | 50.9 | 49.0 | 15.1 | 0.0 |
|  | 5 | 32.4 | 19.9 | 34.2 | 16.4 | 9.1 | 17.0 | 0.0 | 0.2 | 0.0 | 0.0 | 18.1 | 50.9 | 49.0 | 9.1 | 0.0 |
| Idefics2-8B | 0 | 64.5 | 45.2 | 56.0 | 64.3 | 36.6 | 49.5 | 62.9 | 51.1 | 63.8 | 78.1 | 9.7 | 64.1 | 51.9 | 49.2 | 20.5 |
|  | 3 | 66.9 | 42.6 | 48.7 | 66.3 | 34.2 | 39.5 | 66.6 | 9.7 | 66.3 | 79.4 | 39.8 | 9.5 | 53.0 | 53.1 | 9.7 |
|  | 5 | 66.7 | 49.6 | 43.1 | 67.2 | 42.6 | 34.5 | 65.3 | 8.6 | 54.5 | 79.2 | 62.9 | 11.9 | 57.2 | 56.3 | 37.0 |
| Idefics3-8B | 0 | 40.2 | 58.3 | 35.5 | 28.4 | 52.8 | 19.2 | 24.1 | 54.9 | 2.3 | 54.3 | 51.0 | 49.7 | 6.9 | 52.5 | 5.5 |
|  | 3 | 50.9 | 35.9 | 50.7 | 40.3 | 20.7 | 40.6 | 0.5 | 0.5 | 3.4 | 62.9 | 52.6 | 63.6 | 57.6 | 8.9 | 54.8 |
|  | 5 | 36.3 | 34.5 | 62.9 | 21.4 | 18.1 | 58.3 | 0.0 | 0.2 | 64.3 | 51.8 | 51.3 | 85.7 | 12.3 | 2.7 | 25.0 |
| VILA-1.5-8B | 0 | 34.2 | 30.4 | 47.5 | 40.0 | 15.8 | 17.0 | 17.6 | 0.0 | 0.5 | 56.3 | 28.8 | 50.5 | 46.1 | 18.7 | 0.0 |
|  | 3 | 34.2 | 36.9 | 34.2 | 17.0 | 28.8 | 17.0 | 0.0 | 0.0 | 0.5 | 51.0 | 47.6 | 50.5 | 0.0 | 38.5 | 0.0 |
|  | 5 | 34.2 | 39.5 | 34.2 | 17.0 | 30.8 | 17.0 | 0.0 | 0.0 | 0.5 | 51.0 | 51.3 | 50.5 | 0.0 | 41.3 | 0.0 |
| Qwen2-VL-2B | 0 | 30.3 | 34.5 | 34.5 | 26.3 | 20.6 | 20.2 | 14.5 | 5.0 | 10.7 | 5.9 | 7.0 | 1.6 | 58.3 | 49.8 | 49.6 |
|  | 3 | 35.7 | 35.3 | 32.4 | 23.3 | 21.8 | 16.3 | 0.0 | 0.0 | 0.0 | 17.5 | 15.2 | 0.0 | 53.8 | 50.1 | 49.0 |
|  | 5 | 35.3 | 32.6 | 33.1 | 23.8 | 16.5 | 17.7 | 0.0 | 0.0 | 4.1 | 15.2 | 0.7 | 0.0 | 54.6 | 49.0 | 49.3 |
| Qwen2-VL-7B | 0 | 60.2 | 40.0 | 59.9 | 55.7 | 34.2 | 57.4 | 23.7 | 17.7 | 53.6 | 82.0 | 45.0 | 66.9 | 61.6 | 40.3 | 51.5 |
|  | 3 | 63.7 | 34.2 | 69.8 | 53.8 | 17.0 | 64.2 | 2.5 | 0.0 | 33.5 | 94.8 | 50.9 | 84.9 | 64.1 | 0.0 | 74.0 |
|  | 5 | 64.5 | 34.2 | 73.4 | 54.9 | 17.7 | 72.0 | 4.5 | 0.0 | 56.3 | 95.6 | 50.9 | 84.1 | 64.6 | 2.0 | 75.5 |
| Qwen2-VL-72B | 0 | 89.1 | 93.6 | 76.0 | 88.8 | 93.6 | 74.7 | 85.2 | 91.3 | 72.5 | 97.2 | 98.3 | 86.0 | 83.9 | 91.1 | 65.7 |
|  | 3 | 89.3 | 93.1 | 86.1 | 89.3 | 93.1 | 85.9 | 86.7 | 90.4 | 82.9 | 95.8 | 97.9 | 96.2 | 85.5 | 91.1 | 78.8 |
|  | 5 | 89.2 | 92.7 | 88.0 | 89.9 | 92.6 | 87.9 | 88.3 | 90.0 | 84.8 | 96.1 | 97.4 | 96.5 | 85.4 | 90.5 | 82.3 |
| InternVL-4B | 0 | 47.2 | 69.5 | 58.9 | 41.5 | 63.4 | 52.2 | 25.4 | 31.2 | 67.2 | 64.5 | 88.2 | 67.1 | 34.7 | 70.6 | 22.4 |
|  | 3 | 34.2 | 37.3 | 49.9 | 17.0 | 25.3 | 41.7 | 0.0 | 0.0 | 2.3 | 50.9 | 24.9 | 66.5 | 0.0 | 50.9 | 56.5 |
|  | 5 | 34.2 | 48.0 | 58.1 | 17.0 | 39.1 | 52.5 | 0.0 | 0.0 | 61.7 | 50.9 | 61.4 | 76.5 | 0.0 | 55.9 | 19.5 |
| InternVL-8B | 0 | 65.6 | 74.2 | 37.0 | 58.7 | 71.9 | 23.0 | 66.9 | 50.4 | 9.9 | 95.8 | 93.7 | 52.0 | 13.4 | 71.5 | 7.1 |
|  | 3 | 60.6 | 61.7 | 66.9 | 52.3 | 51.7 | 64.4 | 7.4 | 1.6 | 44.5 | 87.0 | 90.9 | 85.7 | 62.6 | 62.4 | 63.0 |
|  | 5 | 51.0 | 62.5 | 61.6 | 43.9 | 53.7 | 50.5 | 15.6 | 8.6 | 66.5 | 60.4 | 89.2 | 83.6 | 55.6 | 63.3 | 1.4 |
| GPT-4o | 0 | 89.2 | 88.7 | 74.7 | 89.2 | 88.4 | 73.5 | 86.3 | 85.2 | 73.9 | 97.2 | 96.7 | 94.6 | 84.0 | 83.5 | 52.0 |
|  | 3 | 87.7 | 88.0 | 86.3 | 88.4 | 87.7 | 86.7 | 85.8 | 84.7 | 82.8 | 97.3 | 95.6 | 95.8 | 82.8 | 82.7 | 81.4 |
|  | 5 | 86.0 | 89.0 | 87.1 | 86.0 | 89.1 | 87.4 | 82.8 | 85.3 | 84.4 | 97.6 | 97.9 | 95.7 | 77.5 | 84.1 | 82.0 |
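The 3- and 5-shot rows show whether in-context examples help on the synthetic images. As a rough sketch, the snippet below transcribes the Detection overall F1 values of the twelve models evaluated at 0, 3 and 5 shots and labels each trajectory. The labeling rule (`improves` if no step goes down and the 5-shot score beats the 0-shot score, `degrades` for the mirror case, `mixed` otherwise) and the helper name `trend` are illustrative choices, not part of SalBench.

```python
from collections import Counter
from typing import Dict, Sequence, Tuple

# Overall F1 for Detection (D) on the synthetic images at 0, 3 and 5 shots,
# transcribed from the table (model names normalized).
F1_OVERALL_D: Dict[str, Tuple[float, float, float]] = {
    "Phi3-4B": (41.2, 33.5, 17.0),
    "Phi3.5-Vision-3.5B": (35.0, 19.5, 29.3),
    "LLaVA-1.6-7B": (16.3, 16.4, 16.4),
    "Idefics2-8B": (64.3, 66.3, 67.2),
    "Idefics3-8B": (28.4, 40.3, 21.4),
    "VILA-1.5-8B": (40.0, 17.0, 17.0),
    "Qwen2-VL-2B": (26.3, 23.3, 23.8),
    "Qwen2-VL-7B": (55.7, 53.8, 54.9),
    "Qwen2-VL-72B": (88.8, 89.3, 89.9),
    "InternVL-4B": (41.5, 17.0, 17.0),
    "InternVL-8B": (58.7, 52.3, 43.9),
    "GPT-4o": (89.2, 88.4, 86.0),
}


def trend(scores: Sequence[float]) -> str:
    """Label a 0/3/5-shot trajectory (hypothetical rule, see lead-in)."""
    steps = [b - a for a, b in zip(scores, scores[1:])]
    if all(s >= 0 for s in steps) and scores[-1] > scores[0]:
        return "improves"
    if all(s <= 0 for s in steps) and scores[-1] < scores[0]:
        return "degrades"
    return "mixed"


labels = {model: trend(vals) for model, vals in F1_OVERALL_D.items()}
print(Counter(labels.values()))
```

Under this rule three models improve (Idefics2-8B, Qwen2-VL-72B, and LLaVA-1.6-7B by only 0.1 points), five degrade (Phi3-4B, VILA-1.5-8B, InternVL-4B, InternVL-8B, and GPT-4o), and four are mixed, so extra shots do not help consistently on this column.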

Results on O3 (natural images). Shot is the number of in-context examples. The Matching columns report overall matching, and all other columns report F1 scores, overall and for seven features (orientation, color, size, focus, shape, location, pattern), for each task (D, R, VR).

| Model | Shot | Matching D | Matching R | Matching VR | Overall D | Overall R | Overall VR | Orientation D | Orientation R | Orientation VR | Color D | Color R | Color VR | Size D | Size R | Size VR | Focus D | Focus R | Focus VR | Shape D | Shape R | Shape VR | Location D | Location R | Location VR | Pattern D | Pattern R | Pattern VR |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Claude-Sonnet | 0 | 40.6 | 42.7 | 40.3 | 48.2 | 51.1 | 53.9 | 40.0 | 43.9 | 49.2 | 95.2 | 95.9 | 95.8 | 40.7 | 47.7 | 44.1 | 27.6 | 14.9 | 21.0 | 51.6 | 59.3 | 60.4 | 28.7 | 34.0 | 41.7 | 53.3 | 62.2 | 64.9 |
| NVLM-D-72B | 0 | 26.7 | 35.6 | 21.6 | 36.5 | 42.1 | 37.3 | 36.6 | 35.1 | 28.4 | 90.9 | 93.2 | 89.4 | 28.6 | 36.0 | 34.1 | 8.3 | 16.1 | 12.3 | 41.4 | 49.0 | 42.5 | 14.7 | 18.4 | 8.3 | 34.8 | 47.1 | 45.9 |
| Molmo-72B | 0 | 19.2 | 18.6 | 15.6 | 40.6 | 41.2 | 36.7 | 27.6 | 30.6 | 24.1 | 94.0 | 91.8 | 90.2 | 35.3 | 32.2 | 30.1 | 17.0 | 14.2 | 12.2 | 44.5 | 41.8 | 39.2 | 12.5 | 18.3 | 11.9 | 53.2 | 59.6 | 51.1 |
| Molmo-7B | 0 | 2.5 | 8.9 | 14.6 | 32.0 | 32.4 | 33.0 | 15.2 | 18.6 | 24.2 | 88.5 | 80.1 | 88.2 | 34.8 | 38.8 | 32.7 | 13.5 | 13.7 | 10.8 | 33.2 | 40.1 | 41.0 | 10.0 | 8.0 | 7.7 | 28.8 | 27.0 | 29.9 |
| Llama3.2-Vision-11B | 0 | 2.8 | 0.0 | 0.0 | 32.1 | 29.1 | 29.7 | 17.7 | 17.1 | 27.1 | 90.6 | 89.3 | 85.6 | 31.1 | 33.4 | 18.1 | 12.7 | 11.5 | 9.3 | 37.5 | 44.6 | 45.5 | 8.4 | 8.1 | 22.5 | 20.6 | 0.0 | 0.0 |
| PaliGemma-3B-448 | 0 | 1.4 | 1.0 | 0.7 | 27.6 | 1.2 | 2.3 | 16.5 | 8.1 | 13.6 | 84.3 | 0.7 | 1.6 | 27.2 | 0.0 | 0.0 | 11.6 | 0.0 | 0.0 | 32.5 | 0.0 | 0.0 | 10.4 | 0.0 | 0.0 | 13.4 | 0.0 | 0.0 |
| Phi3-4B | 0 | 7.0 | 4.5 | 6.4 | 32.1 | 32.8 | 32.8 | 2.1 | 2.1 | 1.9 | 91.1 | 87.5 | 88.2 | 25.2 | 29.3 | 26.3 | 13.5 | 11.3 | 14.3 | 40.2 | 42.1 | 41.1 | 7.5 | 7.8 | 7.4 | 45.2 | 43.9 | 49.6 |
|  | 3 | 0.0 | 1.7 | 3.6 | 34.1 | 32.0 | 32.1 | 15.5 | 14.9 | 12.0 | 89.6 | 88.7 | 88.1 | 30.6 | 29.2 | 23.5 | 9.4 | 10.8 | 11.1 | 40.3 | 38.9 | 39.8 | 7.0 | 7.3 | 8.3 | 46.5 | 34.8 | 42.2 |
|  | 5 | 0.0 | 1.2 | 1.3 | 31.1 | 32.1 | 32.2 | 16.6 | 14.3 | 12.7 | 78.7 | 88.9 | 89.1 | 28.9 | 31.2 | 28.7 | 8.8 | 10.8 | 7.1 | 38.3 | 32.1 | 40.7 | 6.6 | 7.8 | 7.7 | 41.3 | 39.1 | 39.8 |
| Phi3.5-Vision-3.5B | 0 | 12.6 | 2.3 | 7.3 | 23.2 | 27.5 | 27.5 | 1.1 | 22.1 | 12.7 | 91.1 | 86.2 | 88.6 | 29.9 | 22.7 | 22.6 | 4.8 | 11.8 | 9.8 | 9.4 | 37.2 | 39.1 | 1.4 | 7.9 | 7.2 | 24.4 | 4.4 | 27.2 |
|  | 3 | 0.1 | 3.4 | 9.2 | 23.3 | 28.8 | 28.8 | 16.0 | 15.6 | 13.5 | 58.8 | 89.6 | 90.4 | 26.5 | 24.7 | 25.5 | 9.8 | 9.7 | 11.5 | 31.9 | 38.9 | 39.2 | 6.9 | 7.2 | 7.4 | 12.9 | 15.8 | 28.7 |
|  | 5 | 0.5 | 0.4 | 10.3 | 25.2 | 30.8 | 30.8 | 15.2 | 15.6 | 8.7 | 52.5 | 90.2 | 88.5 | 28.5 | 31.5 | 21.2 | 8.9 | 8.8 | 8.3 | 34.1 | 41.1 | 40.9 | 7.3 | 7.8 | 7.0 | 29.6 | 21.3 | 40.5 |
| LLaVA-1.6-7B | 0 | 11.1 | 20.4 | 22.8 | 24.6 | 21.4 | 20.8 | 13.4 | 3.3 | 1.1 | 91.1 | 72.4 | 71.9 | 19.3 | 23.4 | 22.8 | 10.9 | 8.5 | 10.7 | 15.8 | 28.6 | 22.9 | 8.9 | 4.5 | 3.6 | 12.6 | 9.1 | 12.4 |
|  | 3 | 0.0 | 0.1 | 0.2 | 7.1 | 15.2 | 17.8 | 3.6 | 1.1 | 5.2 | 10.4 | 15.2 | 29.3 | 12.2 | 21.5 | 20.8 | 4.3 | 10.3 | 9.1 | 9.5 | 30.7 | 32.7 | 5.4 | 8.4 | 5.5 | 5.4 | 19.4 | 21.9 |
|  | 5 | 0.6 | 0.0 | 0.0 | 11.4 | 10.9 | 9.7 | 0.0 | 0.0 | 0.0 | 24.1 | 4.3 | 0.7 | 21.5 | 22.3 | 20.1 | 5.5 | 7.1 | 7.2 | 17.4 | 30.2 | 27.9 | 5.6 | 7.7 | 5.9 | 5.6 | 6.5 | 5.8 |
| Idefics2-8B | 0 | 37.1 | 5.5 | 4.2 | 19.5 | 29.6 | 33.8 | 7.6 | 15.6 | 11.9 | 91.9 | 72.5 | 85.3 | 19.6 | 30.0 | 32.8 | 0.4 | 11.6 | 16.0 | 9.6 | 46.2 | 44.7 | 5.4 | 7.5 | 7.5 | 4.3 | 23.5 | 38.3 |
|  | 3 | 8.4 | 24.3 | 8.7 | 21.1 | 28.4 | 31.1 | 13.0 | 8.3 | 11.5 | 62.3 | 88.7 | 84.5 | 17.1 | 11.4 | 21.7 | 13.5 | 12.2 | 10.3 | 25.0 | 40.4 | 40.8 | 5.8 | 7.2 | 8.2 | 11.3 | 30.6 | 40.4 |
|  | 5 | 16.1 | 24.2 | 10.5 | 34.7 | 28.3 | 30.9 | 22.5 | 2.3 | 2.1 | 88.0 | 90.5 | 88.4 | 30.0 | 13.6 | 23.7 | 11.8 | 10.0 | 9.9 | 39.2 | 38.1 | 43.0 | 8.6 | 6.9 | 8.6 | 42.9 | 36.6 | 40.8 |
| Idefics3-8B | 0 | 16.1 | 20.7 | 17.1 | 24.3 | 24.3 | 22.1 | 0.0 | 5.0 | 2.3 | 91.5 | 90.7 | 91.6 | 38.5 | 35.0 | 9.3 | 11.0 | 11.1 | 4.5 | 5.8 | 6.0 | 32.9 | 6.2 | 5.0 | 9.1 | 17.2 | 18.0 | 5.0 |
|  | 3 | 8.7 | 10.1 | 6.2 | 26.9 | 26.9 | 21.9 | 8.1 | 7.5 | 1.1 | 84.0 | 86.4 | 90.6 | 22.2 | 23.0 | 5.8 | 13.1 | 12.0 | 11.9 | 32.2 | 31.0 | 38.9 | 7.0 | 6.5 | 4.5 | 21.8 | 22.0 | 0.6 |
|  | 5 | 4.4 | 9.0 | 5.4 | 22.3 | 26.9 | 20.9 | 5.5 | 8.5 | 0.0 | 65.1 | 88.3 | 90.7 | 15.1 | 17.5 | 3.5 | 15.1 | 14.8 | 6.4 | 27.6 | 28.0 | 39.8 | 5.4 | 8.7 | 5.6 | 22.7 | 22.5 | 0.0 |
| VILA-1.5-8B | 0 | 3.8 | 0.0 | 0.0 | 23.5 | 13.0 | 15.8 | 0.0 | 6.2 | 0.0 | 85.2 | 19.2 | 27.1 | 31.8 | 21.1 | 27.3 | 1.6 | 3.1 | 8.1 | 35.4 | 34.8 | 36.6 | 8.8 | 4.9 | 9.1 | 1.8 | 2.1 | 2.7 |
|  | 3 | 1.2 | 0.8 | 0.0 | 25.1 | 28.8 | 28.8 | 16.6 | 11.6 | 6.0 | 68.3 | 72.4 | 79.5 | 22.1 | 31.0 | 28.3 | 9.7 | 10.7 | 9.1 | 24.9 | 35.5 | 36.5 | 8.9 | 7.2 | 7.2 | 25.5 | 22.3 | 36.8 |
|  | 5 | 0.4 | 5.0 | 6.0 | 23.2 | 30.8 | 30.8 | 18.2 | 19.0 | 18.0 | 59.5 | 74.6 | 76.4 | 24.7 | 35.0 | 32.0 | 11.6 | 14.1 | 12.0 | 28.6 | 40.0 | 38.0 | 8.3 | 7.0 | 8.0 | 11.8 | 25.0 | 25.0 |
| Qwen2-VL-2B | 0 | 34.1 | 4.6 | 5.0 | 19.2 | 22.1 | 20.9 | 25.7 | 19.0 | 17.9 | 90.2 | 90.8 | 91.2 | 18.2 | 8.3 | 3.5 | 0.0 | 0.0 | 0.0 | 0.0 | 26.0 | 31.0 | 0.0 | 8.3 | 0.0 | 0.3 | 2.1 | 2.4 |
|  | 3 | 4.8 | 18.9 | 3.5 | 25.2 | 21.4 | 20.2 | 7.7 | 17.5 | 15.0 | 87.2 | 90.3 | 90.5 | 27.9 | 2.9 | 2.4 | 0.0 | 0.0 | 0.0 | 38.8 | 34.5 | 33.7 | 5.9 | 3.4 | 0.0 | 8.5 | 0.9 | 0.0 |
|  | 5 | 2.7 | 26.3 | 25.9 | 25.3 | 21.7 | 20.9 | 15.8 | 19.0 | 18.7 | 90.3 | 90.5 | 90.3 | 28.1 | 11.8 | 6.8 | 0.0 | 0.0 | 0.0 | 34.4 | 27.8 | 24.6 | 3.0 | 2.2 | 0.0 | 5.4 | 0.3 | 0.0 |
| Qwen2-VL-7B | 0 | 9.1 | 10.2 | 7.0 | 32.5 | 32.5 | 35.2 | 31.0 | 30.1 | 17.5 | 92.1 | 92.0 | 91.5 | 32.3 | 33.5 | 34.5 | 2.4 | 2.7 | 3.8 | 32.1 | 36.4 | 41.9 | 7.5 | 7.9 | 10.5 | 32.3 | 33.2 | 46.7 |
|  | 3 | 2.8 | 4.0 | 2.1 | 35.6 | 36.0 | 34.1 | 22.4 | 25.3 | 14.7 | 90.4 | 92.5 | 91.1 | 33.1 | 34.5 | 30.4 | 14.7 | 15.0 | 10.7 | 42.8 | 41.0 | 41.3 | 8.4 | 11.2 | 9.0 | 37.8 | 38.6 | 41.6 |
|  | 5 | 2.0 | 2.1 | 3.2 | 37.2 | 37.2 | 29.3 | 24.6 | 22.0 | 10.0 | 91.2 | 91.5 | 91.1 | 32.3 | 32.0 | 31.6 | 13.8 | 11.2 | 4.9 | 32.3 | 43.0 | 40.9 | 8.3 | 9.5 | 9.7 | 47.8 | 43.5 | 16.8 |
| Qwen2-VL-72B | 0 | 14.3 | 16.7 | 14.3 | 41.7 | 44.6 | 41.7 | 23.7 | 30.0 | 23.7 | 93.7 | 94.8 | 93.7 | 39.0 | 42.3 | 39.0 | 12.8 | 19.8 | 12.8 | 47.2 | 51.0 | 47.2 | 13.4 | 13.2 | 13.4 | 61.9 | 61.0 | 61.9 |
|  | 3 | 28.2 | 34.2 | 28.2 | 43.9 | 43.6 | 43.2 | 24.8 | 28.3 | 24.8 | 93.1 | 94.1 | 93.1 | 38.0 | 39.4 | 37.9 | 18.9 | 16.0 | 18.9 | 48.1 | 53.1 | 48.1 | 23.1 | 17.6 | 23.1 | 56.7 | 57.1 | 56.7 |
|  | 5 | 39.5 | 31.0 | 27.0 | 43.9 | 44.9 | 42.3 | 27.0 | 29.7 | 21.6 | 93.7 | 94.7 | 93.1 | 41.9 | 43.9 | 35.8 | 15.5 | 13.1 | 19.8 | 58.2 | 54.2 | 49.3 | 20.2 | 20.0 | 21.2 | 50.8 | 58.8 | 55.4 |
| InternVL-4B | 0 | 14.9 | 4.6 | 4.5 | 26.6 | 29.8 | 30.7 | 0.0 | 10.5 | 15.4 | 91.4 | 90.3 | 91.4 | 14.3 | 25.3 | 22.4 | 6.3 | 11.7 | 9.3 | 41.8 | 41.0 | 41.0 | 8.0 | 10.7 | 12.2 | 24.6 | 19.4 | 23.4 |
|  | 3 | 4.1 | 2.2 | 2.3 | 27.7 | 27.4 | 29.5 | 16.3 | 15.8 | 16.3 | 78.0 | 85.2 | 89.3 | 25.7 | 26.5 | 25.0 | 8.8 | 8.8 | 10.0 | 36.7 | 33.9 | 36.1 | 2.6 | 6.5 | 7.6 | 26.0 | 14.9 | 22.0 |
|  | 5 | 3.2 | 1.6 | 2.4 | 33.4 | 28.1 | 30.4 | 16.9 | 15.4 | 17.5 | 90.1 | 87.2 | 90.4 | 26.8 | 27.6 | 27.9 | 10.0 | 7.4 | 7.8 | 40.1 | 37.9 | 39.7 | 9.3 | 8.0 | 9.2 | 40.9 | 13.1 | 20.5 |
| InternVL-8B | 0 | 7.4 | 32.8 | 37.4 | 20.0 | 23.0 | 24.8 | 1.2 | 6.7 | 2.2 | 92.3 | 90.2 | 91.3 | 3.6 | 12.4 | 18.2 | 12.4 | 6.8 | 7.2 | 8.7 | 18.0 | 22.0 | 16.2 | 11.4 | 7.2 | 5.5 | 15.8 | 25.6 |
|  | 3 | 9.7 | 23.8 | 5.8 | 30.5 | 24.2 | 31.7 | 14.5 | 11.9 | 13.9 | 80.5 | 89.0 | 90.9 | 27.6 | 9.1 | 25.1 | 9.9 | 13.3 | 10.4 | 33.8 | 16.2 | 35.4 | 7.2 | 0.0 | 5.2 | 39.8 | 30.0 | 40.9 |
|  | 5 | 7.7 | 23.0 | 6.7 | 27.8 | 25.0 | 31.4 | 15.8 | 6.4 | 11.6 | 79.6 | 90.7 | 91.1 | 26.4 | 11.6 | 27.8 | 10.8 | 6.8 | 7.0 | 28.5 | 22.7 | 37.8 | 7.7 | 2.2 | 4.1 | 25.8 | 34.6 | 40.5 |
| GPT-4o | 0 | 45.2 | 46.5 | 42.9 | 47.6 | 47.3 | 42.6 | 51.7 | 52.8 | 48.7 | 95.5 | 95.7 | 94.6 | 32.9 | 28.0 | 14.1 | 30.2 | 19.3 | 21.9 | 52.4 | 49.9 | 42.3 | 35.6 | 40.3 | 34.5 | 34.8 | 45.2 | 42.2 |
|  | 3 | 42.8 | 39.8 | 30.2 | 38.9 | 37.5 | 35.7 | 49.8 | 33.7 | 32.9 | 93.8 | 92.9 | 87.0 | 21.9 | 21.7 | 15.6 | 10.8 | 3.5 | 11.6 | 46.2 | 44.4 | 41.3 | 27.9 | 30.2 | 20.8 | 28.7 | 42.3 | 41.1 |
|  | 5 | 43.4 | 42.3 | 30.7 | 41.9 | 39.8 | 38.4 | 46.8 | 42.6 | 40.3 | 94.2 | 94.2 | 87.4 | 28.9 | 19.2 | 14.9 | 10.7 | 9.5 | 20.3 | 47.6 | 44.9 | 40.6 | 29.6 | 31.2 | 26.1 | 35.2 | 37.2 | 39.1 |
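To make the synthetic-versus-natural gap concrete, the sketch below takes the zero-shot Detection overall F1 from both result tables (the first, whose orientation/color/size columns match the P3 features, and the second, whose seven feature columns correspond to the natural O3 images) and computes how many points each model loses on the natural images. The dictionary is transcribed from the tables with normalized model names; `drop` is a hypothetical helper.

```python
from typing import Dict, Tuple

# Zero-shot overall F1 for Detection (D), transcribed from the two tables
# as (synthetic P3, natural O3).
F1_OVERALL_D_0SHOT: Dict[str, Tuple[float, float]] = {
    "Claude-Sonnet": (86.7, 48.2),
    "NVLM-D-72B": (83.2, 36.5),
    "Molmo-7B": (67.2, 32.0),
    "Molmo-72B": (83.4, 40.6),
    "Llama3.2-Vision-11B": (48.7, 32.1),
    "PaliGemma-3B-448": (41.4, 27.6),
    "Phi3-4B": (41.2, 32.1),
    "Phi3.5-Vision-3.5B": (35.0, 23.2),
    "LLaVA-1.6-7B": (16.3, 24.6),
    "Idefics2-8B": (64.3, 19.5),
    "Idefics3-8B": (28.4, 24.3),
    "VILA-1.5-8B": (40.0, 23.5),
    "Qwen2-VL-2B": (26.3, 19.2),
    "Qwen2-VL-7B": (55.7, 32.5),
    "Qwen2-VL-72B": (88.8, 41.7),
    "InternVL-4B": (41.5, 26.6),
    "InternVL-8B": (58.7, 20.0),
    "GPT-4o": (89.2, 47.6),
}


def drop(p3: float, o3: float) -> float:
    """F1 points lost from synthetic to natural images (negative means a gain)."""
    return round(p3 - o3, 1)


drops = {model: drop(*scores) for model, scores in F1_OVERALL_D_0SHOT.items()}
# Print models from largest to smallest loss.
for model, d in sorted(drops.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{model:<20} {d:+5.1f}")
```

Every model except LLaVA-1.6-7B scores lower on the natural images, and Qwen2-VL-72B loses the most (47.1 points). Because the synthetic overall score appears to average three feature F1 scores and the natural one seven, the comparison is indicative rather than strictly like-for-like.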

@inproceedings{dahou2025salbench,
  title = {SalBench: Vision-Language Models Can't See the Obvious},
  author = {Yasser Dahou and Ngoc Dung Huynh and Phuc H. Le-Khac and Wamiq Reyaz Para and Ankit Singh and Sanath Narayan},
  booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
  year = {2025},
  url = {https://salbench.github.io},
}